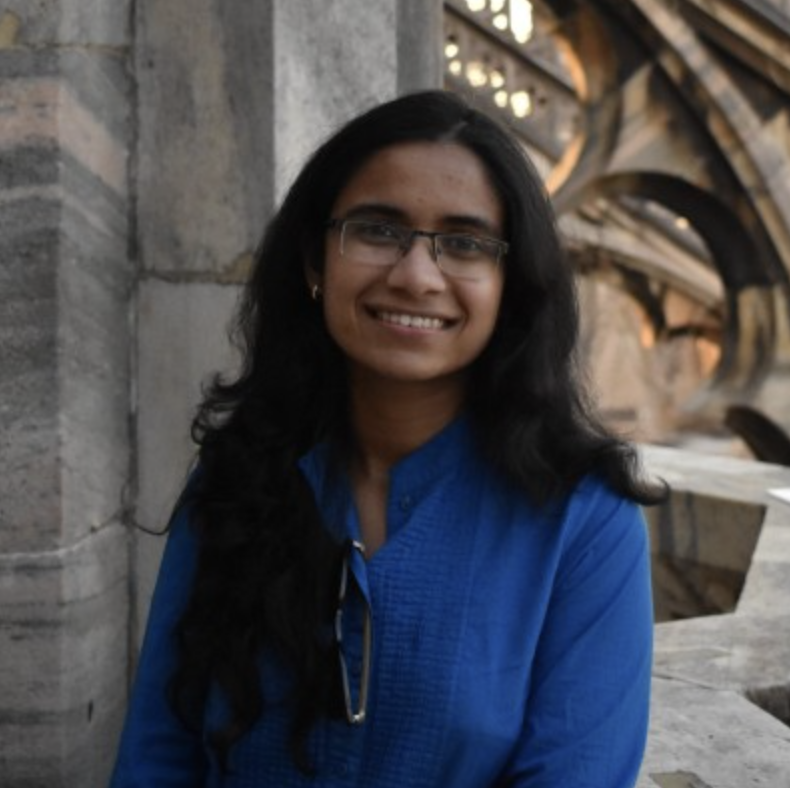

On Thursday, October 9, IBM researcher Dr. Sanchari Sen came to Union College to give a guest lecture on the magical world of artificial intelligence. Many of you probably know it as that free tool that writes essays with dash marks. The engaging talk I attended was called “Powering AI: Tackling the Compute and Energy Challenges Behind the Magic.” It was sponsored by the CS department and the seminar coordinator, Dr. Shruti Mahajan. The title points to a real tension: AI has become incredibly advanced, but it now uses a problematic amount of energy. In case your dinner date ever brings up the topic of AI, read the following dumbed-down article to get the “Royal Scoop” in layman’s terms. Let’s jump in!

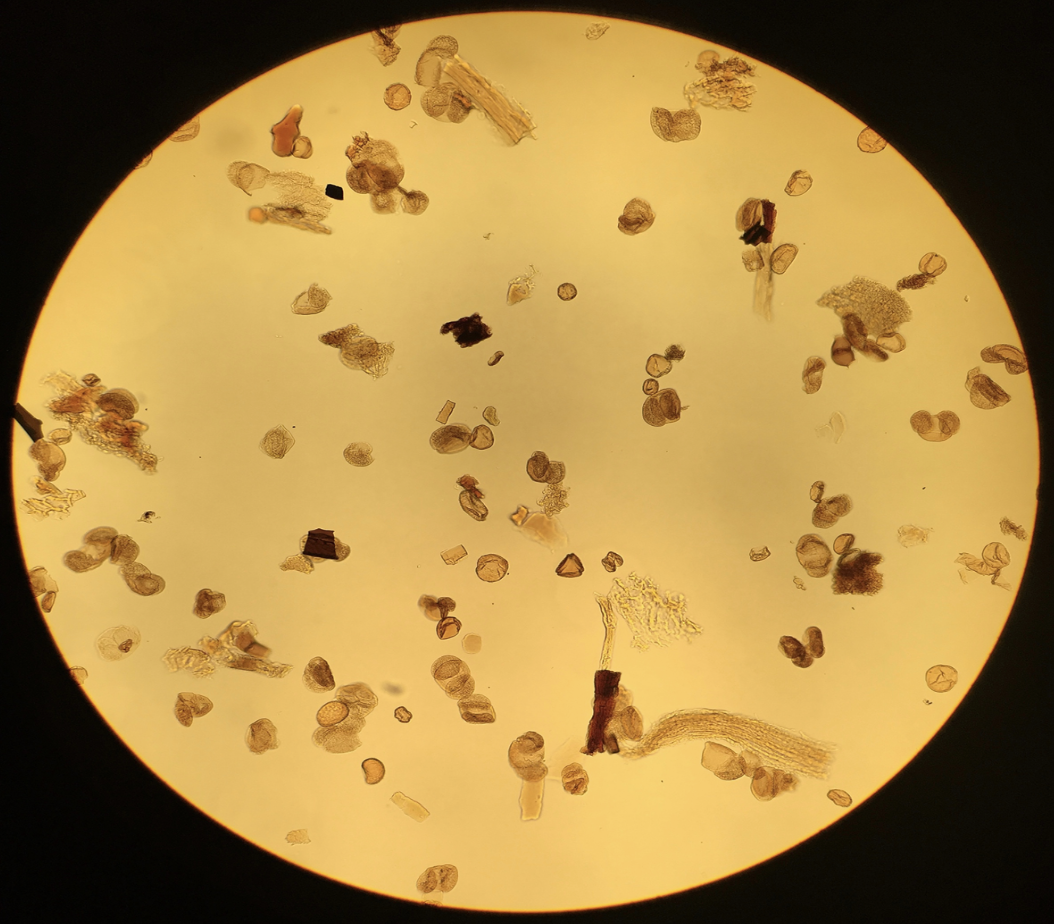

Think about it like this: AI doesn’t think the same way humans do. AI is really good at pattern recognition. It is a computer, after all, and it deals with numbers like ones and zeros. Black and white. It goes up and down, left and right, unlike our brains. Our brains are much more complex: we think colorfully, and our minds go in oblique directions. Inside AI are networks that act like calculators. Here are the two main types you’d want to know: transformer networks and convolutional networks. Transformer networks are mostly language models; they power what happens when you type into ChatGPT or DeepSeek. Convolutional networks are image-processing models that work behind the scenes on image-based tasks, like facial recognition filters or image generation. The best example of this kind of model would be something like that face app you deny using on your selfies. Either way, each network has to perform billions of operations to produce its answer. The computer multiplies inputs by weights, adds biases, and (while the model is being trained) adjusts those weights and biases to get the best possible response to your questions. Think of it as a calculator on steroids.
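For the curious, here is a tiny Python sketch of the “multiply inputs by weights, add biases” step for one layer of a network. It is a simplified illustration, not how ChatGPT or any IBM system is actually implemented; the function names and numbers are made up for this example.

```python
def dense_layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: output[j] = sum(inputs[i] * weights[j][i]) + biases[j]."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = bias                  # start from this output's bias
        for x, w in zip(inputs, row):
            total += x * w            # one multiply-add per input
        outputs.append(total)
    return outputs


def count_multiply_adds(n_inputs: int, n_outputs: int) -> int:
    """Every output needs one multiply-add per input."""
    return n_inputs * n_outputs


# Made-up example values, purely for illustration.
print(dense_layer([1.0, 2.0], [[0.5, -1.0], [2.0, 0.25]], [0.1, -0.5]))
print(count_multiply_adds(4096, 4096))  # 16777216
```

Even one modest layer with 4,096 inputs and 4,096 outputs needs 4096 × 4096 = 16,777,216 multiply-adds, and big models stack many such layers. That is where the billions of operations come from.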

What’s sad about these network models is their energy problem. As Sen dutifully notes, these models consume an enormous amount of energy. The energy cost of AI is so high that it could swindle an entire town of its electricity. Dr. Sanchari Sen’s research at IBM aims to address this issue by reducing that energy cost. Basically, there are data centers out there being overworked, and that is not sustainable for the long haul. For any math majors out there, think of it as an equation: total energy cost equals the number of operations multiplied by the cost of each operation. That means energy can be cut in two ways: reduce the number of operations, or reduce the cost of each operation. This is where optimization comes into play... or should I say... quantization.
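Here is that equation as a few lines of Python. The numbers are invented, in made-up “energy units,” just to show that cutting either the operation count or the per-operation cost shrinks the total. They are not measurements from Sen’s talk or from IBM.

```python
def total_energy(num_ops: float, energy_per_op: float) -> float:
    """Total energy cost = number of operations x cost of each operation."""
    return num_ops * energy_per_op


# Hypothetical numbers in arbitrary "energy units", for illustration only.
baseline    = total_energy(num_ops=1_000_000, energy_per_op=4.0)
fewer_ops   = total_energy(num_ops=500_000,   energy_per_op=4.0)  # half as many operations
cheaper_ops = total_energy(num_ops=1_000_000, energy_per_op=1.0)  # each operation 4x cheaper
both        = total_energy(num_ops=500_000,   energy_per_op=1.0)  # both tricks at once

print(baseline, fewer_ops, cheaper_ops, both)  # 4000000.0 2000000.0 1000000.0 500000.0
```

Halving the operations halves the bill, making each operation four times cheaper cuts it to a quarter, and doing both stacks the savings.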

Quantization may very well be one of the best ways to reduce energy cost. The AI models we use daily often store and calculate with 32-bit numbers while doing our homework. Through significant research backed by IBM, as well as a little bit of magic, it is possible to shrink those numbers to 16 bits or even fewer. Think of it as buying a car that gets more mileage out of less fuel. Quantization does the same for AI models: smaller numbers take less memory to move around and less work to multiply, so the model can run faster on less power. When you reduce the bits, you reduce the electric bill, and some town far, far away can still keep its nightlight on. Luckily, that’s not the only way to trim the costs.
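To make the bit-shrinking idea concrete, here is a minimal Python sketch. It uses the standard `struct` module to round a number to 16-bit (half-precision) floating point, plus a simple “symmetric int8” scheme that squeezes numbers into 8-bit integers with one shared scale factor. This is one common textbook approach, assumed here for illustration; it is not necessarily the specific method IBM uses, and the helper names are made up.

```python
import struct


def to_float16(x: float) -> float:
    """Round-trip x through 16-bit IEEE 754 half precision (struct format "e")."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: map [-max|v|, +max|v|] onto [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # fall back to 1.0 if every value is 0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximately recover the original numbers from the 8-bit integers."""
    return [n * scale for n in q]


weights = [0.82, -1.53, 0.004, 2.1]                  # made-up example weights
print(struct.calcsize("<f"), struct.calcsize("<e"))  # 4 2  (bytes per 32-bit vs 16-bit float)
print(to_float16(0.1))                               # 0.0999755859375: close to 0.1, not exact
q, scale = quantize_int8(weights)
print(q)                                             # each entry now fits in a single byte
print(dequantize(q, scale))                          # close to, but not exactly, the originals
```

The trade-off is precision: each step down in bits introduces a small rounding error, and the research challenge is keeping those errors from hurting the model’s answers.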

Pruning is something scientists do to cut out unnecessary parts of a network, such as connections (weights) that barely affect the answer. Think of it as getting a fade from a barber. Computer scientists are giving AI models a low taper fade so they run faster and leaner. Think about it like this: the smaller the network, the fewer operations the model has to run, so it can respond faster while, ideally, keeping nearly the same performance. With lower energy input and smaller networks, we can save a ton of small towns from reaching a 17th-century aesthetic.
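Here is a bare-bones Python sketch of one common pruning recipe, magnitude pruning: zero out the weights closest to zero, since they contribute the least, and skip multiplying by them. The function names and example weights are hypothetical, and this is a textbook version of the idea rather than the specific technique from Sen’s research.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Set the smallest-magnitude fraction `sparsity` of weights to zero."""
    n_prune = int(len(weights) * sparsity)
    # indices ordered from the smallest |weight| to the largest
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def count_multiplies(weights: list[float]) -> int:
    """Zeroed weights can be skipped, so only nonzero weights cost a multiply."""
    return sum(1 for w in weights if w != 0.0)


weights = [0.9, -0.02, 0.4, 0.001, -0.7, 0.05, 0.3, -0.01]  # made-up weights
trimmed = magnitude_prune(weights, sparsity=0.5)
print(trimmed)                                                    # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.3, 0.0]
print(count_multiplies(weights), "->", count_multiplies(trimmed))  # 8 -> 4
```

In practice, skipping zeros only saves time and energy when the hardware or software is built to take advantage of them.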

CPUs and GPUs handle the big-picture work when it comes to running these models. Large AI tasks get broken down into many smaller tasks so the job gets done more easily. Basically, whenever you go on a rant at ChatGPT, there is an army of Oompa-Loompas called specialized accelerators that split up the math behind your rant and crunch the pieces in parallel, all at once. Research organizations like IBM have worked on specialized accelerators and mixed-precision processors that help AI models run faster. Through this research, IBM has helped advance the technology by making smarter chips that require less power and thus put less strain on the electrical grid. These advancements are why we get to see quicker responses and generations.
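The “break it into smaller tasks” idea looks roughly like this Python sketch, which splits a matrix-vector multiplication (the core math inside a network layer) into chunks of rows and hands each chunk to a separate worker. The worker count and function names are arbitrary choices for illustration. Python threads only demonstrate the splitting here; real GPUs and accelerators run thousands of such pieces simultaneously in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row: list[float], x: list[float]) -> float:
    """Multiply-and-add one row of weights against the input vector."""
    return sum(w * xi for w, xi in zip(row, x))


def matvec_chunk(rows: list[list[float]], x: list[float]) -> list[float]:
    """Handle one worker's share of the rows."""
    return [dot(r, x) for r in rows]


def parallel_matvec(matrix: list[list[float]], x: list[float], workers: int = 4) -> list[float]:
    # Split the rows into roughly equal chunks, one per worker (ceiling division).
    size = max(1, -(-len(matrix) // workers))
    chunks = [matrix[i:i + size] for i in range(0, len(matrix), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(matvec_chunk, chunks, [x] * len(chunks))
        # pool.map returns results in chunk order, so stitching them back is simple.
        return [y for part in parts for y in part]


print(parallel_matvec([[1, 2], [3, 4], [5, 6]], [1, 1], workers=2))  # [3, 7, 11]
```

The answer is identical to doing the rows one by one; the only difference is that the work is divided so many helpers can tackle it at the same time.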

If you’ve gotten this far in the article, just know that you’re doing a good job. Learning about AI can feel daunting, but through sheer effort and will you can eventually see the magic behind it. In case you haven’t retained anything I’ve said, just remember this: quantization (using fewer bits) reduces energy cost, pruning trims unnecessary parts out of AI models, and CPUs, GPUs, and specialized accelerators split up the work so responses are generated faster.